python ray vs celery

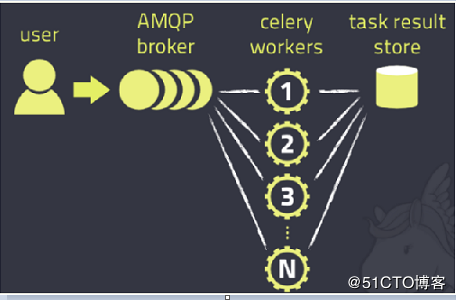

Celery seems to have several ways to pass messages (tasks) around, including ways that let you run workers on different machines. The main purpose of the Spark project was to speed up the execution of distributed big data tasks, which at that point in time were handled by Hadoop MapReduce.

There are also open source frameworks that provide a simple Python library for queueing jobs and processing them in the background.

Some alternatives follow a similar syntax to Celery and have less overhead to get up and running. Bottom line: Celery is a framework that decreases performance load through postponed tasks, as it processes asynchronous and scheduled jobs.

The higher-level libraries are built on top of the lower-level APIs. You can also distribute work across machines using just multiprocessing, but I wouldn't recommend doing that. Ray may be the easier choice for developers looking for general purpose distributed applications. With Celery, your application just needs to push messages to a broker, like RabbitMQ, and Celery workers will pop them and schedule task execution. Workers are the processes that run the background jobs.

In this article we looked at three of the most popular frameworks for parallel computing. To make things even more convoluted, there is also the Dask-on-Ray project, which allows you to run Dask workflows without using the Dask Distributed Scheduler. To better understand the niche that Dask-on-Ray tries to fill, we need to look at the core components of the Dask framework. Dask was able to relieve some major pain points in scikit-learn, like computationally heavy grid searches and workflows that are too big to fit completely in memory. There is also the Ray on Spark project, which allows us to run Ray programs on Apache Hadoop/YARN.

But in light of all the other changes that have happened over the years with respect to Python and the availability of Python ZeroMQ libraries and function pickling, it might be worth taking a look at leveraging ZeroMQ and PiCloud's function pickling.

My app is very CPU heavy but currently uses only one CPU, so I need to spread it across all available CPUs (which caused me to look at Python's multiprocessing library), but I read that this library doesn't scale to other machines if required. Or is it more advised to use multiprocessing and grow out of it into something else later?

I just finished a test to decide how much Celery adds as overhead over multiprocessing.Pool and shared arrays. In short, Celery is good at taking care of asynchronous or long-running tasks that can be delayed and do not require real-time interaction. That's not a knock against Celery/Airflow/Luigi by any means.
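To make the broker pattern described above more concrete (an application pushes messages to a broker, and workers pop them and run the work), here is a minimal in-process sketch. It is not Celery and does not use RabbitMQ: a standard-library `queue.Queue` stands in for the broker and threads stand in for workers. The names `run_workers` and `add` are hypothetical and exist only for this illustration.

```python
import queue
import threading


def run_workers(jobs, num_workers=3):
    """Push (func, args) jobs onto a queue and let worker threads pop and run them.

    Returns the results in the same order as the submitted jobs.
    """
    broker = queue.Queue()   # stand-in for a real broker such as RabbitMQ
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            item = broker.get()
            if item is None:             # sentinel: no more work for this worker
                broker.task_done()
                break
            job_id, func, args = item
            value = func(*args)          # run the task
            with lock:
                results[job_id] = value
            broker.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()

    # Producer side: the application only pushes messages onto the queue.
    for job_id, (func, args) in enumerate(jobs):
        broker.put((job_id, func, args))
    for _ in threads:
        broker.put(None)                 # one sentinel per worker

    broker.join()
    for t in threads:
        t.join()
    return [results[i] for i in range(len(jobs))]


def add(x, y):
    return x + y


if __name__ == "__main__":
    print(run_workers([(add, (1, 2)), (add, (10, 20)), (pow, (2, 8))]))
```

A real task queue adds what this sketch leaves out: a broker that lives in its own process, workers on other machines, retries, and error handling (here, an exception inside a task would stop its worker thread).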

You could easily handle rate limiting in pure Python on the client side. Some people use Celery's pool version. The three frameworks have had different design goals from the get-go, and trying to shoehorn fundamentally different workflows into a single one of them is probably not the wisest choice.
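As one possible reading of "rate limiting in pure Python on the client side", the sketch below implements a small sliding-window limiter using only the standard library. The class name `RateLimiter` and its parameters are assumptions made for this example; they do not come from Celery or any other library. The clock and sleep functions are injectable so the behaviour can be checked without real waiting.

```python
import collections
import time


class RateLimiter:
    """Allow at most `max_calls` calls within any `period`-second window."""

    def __init__(self, max_calls, period, clock=time.monotonic, sleep=time.sleep):
        self._max_calls = max_calls
        self._period = period
        self._clock = clock
        self._sleep = sleep
        self._calls = collections.deque()   # timestamps of recent calls

    def wait(self):
        """Block until another call is allowed, then record it."""
        while True:
            now = self._clock()
            # Forget calls that have fallen out of the window.
            while self._calls and now - self._calls[0] >= self._period:
                self._calls.popleft()
            if len(self._calls) < self._max_calls:
                self._calls.append(now)
                return
            # Window is full: sleep until the oldest call expires, then re-check.
            self._sleep(self._period - (now - self._calls[0]))
```

A client would call `limiter.wait()` immediately before submitting each task, so the limit is enforced before anything reaches the queue.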
Ray originated with the RISE Lab at UC Berkeley. Celery is an asynchronous task queue/job queue based on distributed message passing.

I have actually never used Celery, but I have used multiprocessing. I don't know how well Celery would deal with task failures.

Celery is an open source asynchronous task queue or job queue which is based on distributed message passing. Besides Python, there are clients such as gocelery for Go and rusty-celery for Rust. The first argument to Celery is the name of the current module. The apply_async method has a link= parameter that can be used to call follow-up tasks. There are also libraries that run Python functions (or any other callable) periodically using a friendly syntax.

In Ray, turning a function into a remote function creates a task which can be scheduled across your laptop's CPU cores (or a Ray cluster).

The importance of mixing frameworks is already evident by the emergence of integration libraries. For example, Uber's machine learning platform Michelangelo defines a Ray Estimator API, which abstracts the process of moving between Spark and Ray for end users. MapReduce was designed with scalability and reliability in mind, but performance or ease of use has never been its strong side. One of Spark's drawbacks is its complex architecture, which is difficult to maintain by IT alone, as proper maintenance requires understanding of the computation paradigms and inner workings of Spark.

Ray is an open-source unified compute framework that makes it easy to scale AI and Python workloads, from reinforcement learning to deep learning to tuning and model serving. Python itself has stayed in the top ten most popular languages since 2003, according to the TIOBE Programming Community Index.

So I tell Celery to do some hard task that could take up to a minute. Basically it's just math in a large recursion with lots of data inputs.

Celery supports local and remote workers, so you can start with a single worker running on the same machine as the Flask server, and later add more workers as the needs of your application grow. An example use case is having high priority workers. Celery is used in some of the most data-intensive applications, including Instagram.
One test of how much overhead Celery adds over multiprocessing.Pool used a (292, 353, 1652) uint16 array: Celery via pickled transfer took 38s, multiprocessing.Pool 27s. The Celery workers were already running on the host, whereas the pool workers are forked at each run. Celery works with several message brokers, like RabbitMQ or Redis, and can act as both producer and consumer.
Faust uses Kafka as a broker, not RabbitMQ, and Kafka behaves differently. Dask, on the other hand, can be used for general purpose work.

[Figure: High-level overview of the flow from Spark (DataFrames) to Ray (distributed training) and back to Spark (Transformer).]

Because Ray is being used more and more to scale different ML libraries, you can use all of them together in a scalable, parallelised fashion.

Turning Python functions into remote functions (Ray tasks): Ray can be installed through pip. It is up to the remote function to actually make use of the GPU (typically via external libraries like TensorFlow and PyTorch).

TLDR: If you don't want to understand the under-the-hood explanation, here's what you've been waiting for: you can use threading if your program is network bound or multiprocessing if it's CPU bound.
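The TLDR above (threading for network-bound work, multiprocessing for CPU-bound work) can be sketched with the standard library's `concurrent.futures`. This is only an illustration under stated assumptions: `fake_download` is a hypothetical stand-in that sleeps instead of making a real network request, and `fetch_all` is a name invented for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item):
    """Hypothetical stand-in for a network call: the thread mostly waits."""
    time.sleep(0.05)
    return item * 2


def fetch_all(items, max_workers=8):
    """Run the waiting-heavy calls concurrently in threads, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_download, items))


if __name__ == "__main__":
    print(fetch_all([1, 2, 3]))
```

For CPU-bound functions, the same structure works with `ProcessPoolExecutor` in place of `ThreadPoolExecutor`, provided the worker function is defined at module top level and the calling code sits under an `if __name__ == "__main__":` guard.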

What is the biggest difference between the `Celery` library and the `multiprocessing` library with respect to parallel programming? Should I use plain Python code or Celery?

Faust uses Kafka as a broker, not RabbitMQ, and Kafka behaves differently. Dask, on the other hand, can be used for general-purpose work.

Turning Python functions into remote functions (Ray tasks): Ray can be installed through pip. It is up to the remote function to actually make use of the GPU (typically via external libraries like TensorFlow and PyTorch). Because Ray is being used more and more to scale different ML libraries, you can use all of them together in a scalable, parallelised fashion.

[Figure: High-level overview of the flow from Spark (DataFrames) to Ray (distributed training) and back to Spark (Transformer).]

TL;DR: if you don't want the under-the-hood explanation, here is the short version: use threading if your program is network-bound, or multiprocessing if it is CPU-bound.
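The threading-versus-multiprocessing rule of thumb above can be sketched with the standard library alone. This is a minimal illustration, not code from any of the frameworks discussed here: `fake_download` and `fetch_all` are hypothetical names, and the network call is simulated with `time.sleep`.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item_id: int) -> int:
    """Hypothetical stand-in for a network call: it only sleeps."""
    time.sleep(0.1)  # the thread is idle while waiting, so other threads can run
    return item_id * 2


def fetch_all(item_ids, max_workers: int = 8):
    """Run the I/O-bound calls concurrently in a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in the same order as the inputs
        return list(pool.map(fake_download, item_ids))


if __name__ == "__main__":
    start = time.perf_counter()
    results = fetch_all(range(8))
    elapsed = time.perf_counter() - start
    print(results, f"{elapsed:.2f}s")
```

For CPU-bound work, `ProcessPoolExecutor` from the same module offers the same `map` interface, so switching between the two is usually a one-line change.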
Do you need fault tolerance (for example, because you are trying to use volunteer computing scattered all over the place), or are you just looking to use computers in a lab or a cluster?

Celery is one of the most popular Python background task managers. While it supports scheduling, its focus is on operations in real time. A downside might be that message passing could be slower than with multiprocessing, but on the other hand you could spread the load to other machines.

Unlike Dask, however, Ray doesn't try to mimic the NumPy and Pandas APIs. Its primary design goal was not to make a drop-in replacement for data science workloads but to provide a general low-level framework for parallelizing Python code. Some frameworks come with a steep learning curve involving a new execution model and API. Also, Ray essentially solved the issue of serving the services through FastAPI, which I had implemented with Django + Celery.
This makes Ray more of a general-purpose clustering and parallelisation framework that can be used to build and run any type of distributed application. Ray is similar to Dask in that it enables the user to run Python code in a parallel fashion and across multiple machines. The importance of mixing frameworks is already evident from the emergence of integration libraries that make this inter-framework communication more streamlined.

Celery, as a Python-based task queue, also saves designers a significant amount of time. In addition to Python there's node-celery for Node.js, a PHP client, gocelery for Go, and rusty-celery for Rust.

The initial goal of single-machine parallelisation was later surpassed by the introduction of a distributed scheduler, which now enables Dask to operate comfortably in a multi-machine, multi-terabyte problem space.

The Celery distributed task queue is the most commonly used Python library for handling asynchronous tasks and scheduling. Celery utilizes tasks, which can be thought of as regular Python functions that are called with Celery. If you have used Celery you probably know tasks such as this one: an app is created with `app = Celery(broker='amqp://')` (after `from celery import Celery`), and a function `add(x, y)` that returns `x + y` is registered on it with the `@app.task()` decorator. Some people use Celery's pool version.

For example, Spark on Ray does exactly this: it "combines your Spark and Ray clusters, making it easy to do large-scale data processing using the PySpark API and seamlessly use that data to train your models using TensorFlow and PyTorch."
First, let's build our Dockerfile and issue the command to build our image. Examples of printed messages in the terminal: [2023-04-03 07:32:01,260: INFO/MainProcess] Task {my task name here}.

The Celery task above can also be rewritten in Faust. Faust also supports storing state with the task (see Tables and Windowing), and it supports leader election, which is useful for things such as locks.

Celery is focused on real-time operation, but supports scheduling as well. Meaning, it allows Python applications to rapidly implement task queues for many workers. This saves time and effort on many levels. Celery is compatible with several message brokers like RabbitMQ or Redis and can act as both producer and consumer. The queue is durable, so that it survives a restart of the RabbitMQ server and of the RabbitMQ worker.

Ray has no built-in primitives for partitioned data. We discussed their strengths and weaknesses, and gave some general guidance on how to choose the right framework for the task at hand.

What makes you think that multiple CPUs will help an IO-heavy application? If your application is IO-bound then you need multiple IO channels, not CPUs.

Some frameworks are ideal for data engineering and ETL-type tasks against large datasets.

Right now I'm not sure if I'll need more than one server to run my code, but I'm thinking of running Celery locally; scaling would then only require adding new servers instead of refactoring the code (as it would if I used multiprocessing).

Trying with another dataset (276, 385, 3821): Celery via pickled transfer took 38 s, multiprocessing.Pool 27 s.
The recommended approach is not to look for the ultimate framework that fits every possible need or use case, but to understand how they fit into various workflows and to have a data science infrastructure that is flexible enough to allow for a mix-and-match approach. The Dask/Ray selection is not that clear cut, but the general rule is that Ray is designed to speed up any type of Python code, whereas Dask is geared towards data-science-specific workflows. Ray uses shared memory and zero-copy serialization for efficient data handling. That's not a knock against Celery/Airflow/Luigi by any means.

Celery is a system for executing work, usually in a distributed fashion.

Sorry, wrong word: it is the opposite, very CPU-intensive. Take into account that the Celery workers were already running on the host, whereas the pool workers are forked at each run. Also, if you need to process very large amounts of data, you could easily read and write data from and to the local disk, and just pass filenames between the processes.
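The suggestion to pass filenames instead of the data itself can be illustrated by comparing how many bytes would have to be pickled in each case. This is a rough sketch using only the standard library; `payload_size`, `save_to_disk` and `process_file` are hypothetical helper names, and it assumes producer and worker can see the same local disk.

```python
import os
import pickle
import tempfile


def payload_size(obj) -> int:
    """Number of bytes that would be pickled to hand obj to another process."""
    return len(pickle.dumps(obj))


def save_to_disk(data: bytes) -> str:
    """Write data to a temporary file and return its path."""
    fd, path = tempfile.mkstemp(suffix=".bin")
    with os.fdopen(fd, "wb") as fh:
        fh.write(data)
    return path


def process_file(path: str) -> int:
    """Worker-side step: load the file locally and compute a simple checksum."""
    with open(path, "rb") as fh:
        return sum(fh.read())


if __name__ == "__main__":
    data = bytes(range(256)) * 4096  # about 1 MiB of sample data
    path = save_to_disk(data)
    print("pickled data:", payload_size(data), "bytes")
    print("pickled path:", payload_size(path), "bytes")
    print("checksum:", process_file(path))
    os.remove(path)
```

The message that crosses the process boundary shrinks from the size of the dataset to the size of a short string. The cost is an extra write and read on disk, so whether this helps depends on the workload.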
Unlike Spark, one of the original design principles adopted in the Dask development was "invent nothing". The central dask-scheduler process coordinates the actions of several processes. On the other hand, the distributed scheduler is not without flaws.

In Ray, GPU support is restricted to scheduling and reservations. Learn more about Ray's rich set of libraries and integrations.

Celery is a task queue/job queue based on distributed message passing. With Celery, all results flow back to a central authority. See the sample code on the jeffknupp.com blog. A key concept in Celery is the difference between the Celery daemon (celeryd), which executes tasks, and Celerybeat, which is a scheduler. Think of celeryd as a tunnel-vision set of one or more workers that handle whatever tasks you put in front of them. Each worker will perform a task and, when the task is completed, will pick up the next one.
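The worker behaviour described above (take a task, run it, pick up the next one) can be sketched without any broker. This is not Celery's API. It is a standard-library illustration in which an in-process `queue.Queue` stands in for the message broker and threads stand in for worker processes; `worker`, `run_tasks` and `add` are hypothetical names.

```python
import queue
import threading


def worker(tasks: queue.Queue, results: list, lock: threading.Lock) -> None:
    """Take a task, run it, then pick up the next one until told to stop."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        func, args = item
        value = func(*args)
        with lock:  # results is shared between workers
            results.append(value)


def run_tasks(task_list, num_workers: int = 3) -> list:
    """Push tasks onto the queue and let a fixed set of workers drain it."""
    tasks: queue.Queue = queue.Queue()
    results: list = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(tasks, results, lock))
        for _ in range(num_workers)
    ]
    for t in threads:
        t.start()
    for task in task_list:
        tasks.put(task)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
    return results  # completion order, not submission order


def add(x, y):
    return x + y


if __name__ == "__main__":
    print(sorted(run_tasks([(add, (i, i)) for i in range(5)])))
```

In a real deployment the queue lives in a broker such as RabbitMQ or Redis, which is what lets workers run on other machines. The loop each worker runs is conceptually the same.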
